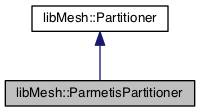

Partitioner which provides an interface to ParMETIS.

#include <parmetis_partitioner.h>

Public Member Functions

| Type | Member |
| --- | --- |
| | ParmetisPartitioner () |
| | ParmetisPartitioner (const ParmetisPartitioner &other) |
| ParmetisPartitioner & | operator= (const ParmetisPartitioner &)=delete |
| | ParmetisPartitioner (ParmetisPartitioner &&)=default |
| ParmetisPartitioner & | operator= (ParmetisPartitioner &&)=default |
| virtual | ~ParmetisPartitioner () |
| virtual std::unique_ptr< Partitioner > | clone () const override |
| virtual void | partition (MeshBase &mesh, const unsigned int n) |
| virtual void | partition (MeshBase &mesh) |
| virtual void | partition_range (MeshBase &, MeshBase::element_iterator, MeshBase::element_iterator, const unsigned int) |
| void | repartition (MeshBase &mesh, const unsigned int n) |
| void | repartition (MeshBase &mesh) |
| virtual void | attach_weights (ErrorVector *) |

Static Public Member Functions

| Type | Member |
| --- | --- |
| static void | partition_unpartitioned_elements (MeshBase &mesh) |
| static void | partition_unpartitioned_elements (MeshBase &mesh, const unsigned int n) |
| static void | set_parent_processor_ids (MeshBase &mesh) |
| static void | set_node_processor_ids (MeshBase &mesh) |
| static void | processor_pairs_to_interface_nodes (MeshBase &mesh, std::map< std::pair< processor_id_type, processor_id_type >, std::set< dof_id_type >> &processor_pair_to_nodes) |
| static void | set_interface_node_processor_ids_linear (MeshBase &mesh) |
| static void | set_interface_node_processor_ids_BFS (MeshBase &mesh) |
| static void | set_interface_node_processor_ids_petscpartitioner (MeshBase &mesh) |

Protected Member Functions

| Type | Member |
| --- | --- |
| virtual void | _do_repartition (MeshBase &mesh, const unsigned int n) override |
| virtual void | _do_partition (MeshBase &mesh, const unsigned int n) override |
| virtual void | build_graph (const MeshBase &mesh) override |
| void | single_partition (MeshBase &mesh) |
| void | single_partition_range (MeshBase::element_iterator it, MeshBase::element_iterator end) |
| virtual void | _find_global_index_by_pid_map (const MeshBase &mesh) |
| void | assign_partitioning (const MeshBase &mesh, const std::vector< dof_id_type > &parts) |

Protected Attributes

| Type | Attribute |
| --- | --- |
| ErrorVector * | _weights |
| std::unordered_map< dof_id_type, dof_id_type > | _global_index_by_pid_map |
| std::vector< dof_id_type > | _n_active_elem_on_proc |
| std::vector< std::vector< dof_id_type > > | _dual_graph |
| std::vector< Elem * > | _local_id_to_elem |

Static Protected Attributes

| Type | Attribute |
| --- | --- |
| static const dof_id_type | communication_blocksize = 1000000 |

Private Member Functions

| Type | Member |
| --- | --- |
| void | initialize (const MeshBase &mesh, const unsigned int n_sbdmns) |

Private Attributes

| Type | Attribute |
| --- | --- |
| std::unique_ptr< ParmetisHelper > | _pmetis |

Detailed Description

Partitioner which provides an interface to ParMETIS.

The ParmetisPartitioner uses the Parmetis graph partitioner to partition the elements.

- Date

- 2003

Definition at line 47 of file parmetis_partitioner.h.

Constructor & Destructor Documentation

◆ ParmetisPartitioner() [1/3]

| libMesh::ParmetisPartitioner::ParmetisPartitioner | ( | ) |

Default and copy ctors.

◆ ParmetisPartitioner() [2/3]

| libMesh::ParmetisPartitioner::ParmetisPartitioner | ( | const ParmetisPartitioner & | other | ) |

◆ ParmetisPartitioner() [3/3]

|

default |

The move ctor and move assignment operator are explicitly inline-defaulted for this class; the destructor is declared here and defaulted out of line.

◆ ~ParmetisPartitioner()

|

virtual |

The destructor is out-of-line-defaulted to play nice with forward declarations.
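The special-member choices documented above are typical for a class that holds a `std::unique_ptr` to a type which is only forward-declared in its header. The following single-file sketch shows the pattern under that assumption; `Helper` and `Wrapper` are hypothetical stand-ins, not the libMesh classes, and the "header" and "source" parts would normally live in separate files.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// --- "header" part: Helper is only forward-declared here ---
struct Helper;

class Wrapper
{
public:
  Wrapper();
  Wrapper(const Wrapper & other);                 // hand-written deep copy
  Wrapper & operator=(const Wrapper &) = delete;  // unique_ptr member: no default copy assignment
  Wrapper(Wrapper &&) = default;                  // moves just transfer the pointer
  Wrapper & operator=(Wrapper &&) = default;
  ~Wrapper();                                     // declared only; defined where Helper is complete

  int value() const;
  void set_value(int v);

private:
  std::unique_ptr<Helper> _impl;
};

// --- "source" part: Helper is a complete type from here on ---
struct Helper
{
  int value = 0;
};

Wrapper::Wrapper() : _impl(std::make_unique<Helper>()) {}

// Copy the pointee, not the pointer; a moved-from source holds nothing.
Wrapper::Wrapper(const Wrapper & other)
  : _impl(other._impl ? std::make_unique<Helper>(*other._impl) : nullptr) {}

// Defaulted here so that unique_ptr sees the full definition of Helper.
Wrapper::~Wrapper() = default;

int Wrapper::value() const
{
  assert(_impl); // a moved-from Wrapper must not be queried
  return _impl->value;
}

void Wrapper::set_value(int v)
{
  assert(_impl);
  _impl->value = v;
}
```

Copy-constructing a `Wrapper` yields an independent helper, while moving one leaves the source empty. Keeping the destructor definition next to the complete `Helper` is what lets the header avoid including the helper's own header.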

Member Function Documentation

◆ _do_partition()

|

override protected virtual |

Partition the MeshBase into n subdomains.

Implements libMesh::Partitioner.

◆ _do_repartition()

|

override protected virtual |

Parmetis can handle dynamically repartitioning a mesh such that the redistribution costs are minimized. This method takes a previously partitioned mesh (which may have then been adaptively refined) and repartitions it.

Reimplemented from libMesh::Partitioner.

Definition at line 93 of file parmetis_partitioner.C.

References mesh, libMesh::MIN_ELEM_PER_PROC, libMesh::out, libMesh::Partitioner::partition(), and libMesh::MetisPartitioner::partition_range().
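One way to make the redistribution cost mentioned above concrete is to count how many elements change processor between the old and the new partitioning. The sketch below does only that; it is not ParMETIS's actual cost model, and the function name `n_migrated` and the flat vector-of-processor-ids representation are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Count the elements whose processor id differs between two partitionings.
// old_pid[e] and new_pid[e] are the processor ids of element e (hypothetical layout).
std::size_t n_migrated(const std::vector<unsigned int> & old_pid,
                       const std::vector<unsigned int> & new_pid)
{
  assert(old_pid.size() == new_pid.size());
  std::size_t moved = 0;
  for (std::size_t e = 0; e < old_pid.size(); ++e)
    if (old_pid[e] != new_pid[e])
      ++moved;
  return moved;
}
```

Relabeling `{0, 0, 1, 1}` as `{1, 1, 0, 0}` describes the same grouping of elements but moves all four of them, which is the kind of outcome a repartitioning step tries to avoid.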

◆ _find_global_index_by_pid_map()

|

protected virtual inherited |

Construct contiguous global indices for the current partitioning. The global indices are ordered part-by-part.

Definition at line 907 of file partitioner.C.

References libMesh::Partitioner::_global_index_by_pid_map, libMesh::Partitioner::_n_active_elem_on_proc, libMesh::as_range(), libMesh::MeshTools::create_bounding_box(), libMesh::MeshCommunication::find_local_indices(), mesh, and libMesh::Parallel::sync_dofobject_data_by_id().

Referenced by libMesh::Partitioner::build_graph().
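A part-by-part numbering can be illustrated with a per-part count followed by a prefix sum. This is a serial sketch under stated assumptions: the function name `number_part_by_part` is hypothetical, the input is a plain vector of processor ids, and elements inside one part are numbered in element-id order, which need not match the ordering libMesh uses.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

// elem_pid[e] is the current processor of element e (hypothetical input).
// Returns element id -> contiguous index, with all of part 0 first, then part 1, and so on.
std::unordered_map<std::size_t, std::size_t>
number_part_by_part(const std::vector<unsigned int> & elem_pid, unsigned int n_parts)
{
  // Count the elements in each part.
  std::vector<std::size_t> n_on_proc(n_parts, 0);
  for (unsigned int pid : elem_pid)
    {
      assert(pid < n_parts);
      ++n_on_proc[pid];
    }

  // An exclusive prefix sum gives the first index of each part.
  std::vector<std::size_t> next(n_parts, 0);
  for (unsigned int p = 1; p < n_parts; ++p)
    next[p] = next[p - 1] + n_on_proc[p - 1];

  // Hand out indices within each part in element-id order.
  std::unordered_map<std::size_t, std::size_t> index;
  for (std::size_t e = 0; e < elem_pid.size(); ++e)
    {
      const std::size_t assigned = next[elem_pid[e]]++;
      index[e] = assigned;
    }
  return index;
}
```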

◆ assign_partitioning()

|

protected inherited |

Assign the computed partitioning to the mesh.

Definition at line 1113 of file partitioner.C.

References libMesh::Partitioner::_global_index_by_pid_map, libMesh::Partitioner::_n_active_elem_on_proc, data, mesh, and libMesh::Parallel::pull_parallel_vector_data().

◆ attach_weights()

|

inline virtual inherited |

Attach weights that can be used for partitioning. This ErrorVector should be exactly the same on every processor and should have mesh->max_elem_id() entries.

Reimplemented in libMesh::MetisPartitioner.

Definition at line 203 of file partitioner.h.

◆ build_graph()

|

override protected virtual |

Build the graph.

Reimplemented from libMesh::Partitioner.

Definition at line 388 of file parmetis_partitioner.C.

References mesh.

◆ clone()

|

inline override virtual |

- Returns

- A copy of this partitioner wrapped in a smart pointer.

Implements libMesh::Partitioner.

Definition at line 79 of file parmetis_partitioner.h.
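The `clone()` contract, a polymorphic copy returned through a smart pointer to the base class, can be sketched as follows. `BasePartitioner` and `GraphPartitioner` are hypothetical classes used only to show the idiom; they are not the libMesh types.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical base class exposing a polymorphic copy operation.
class BasePartitioner
{
public:
  virtual ~BasePartitioner() = default;
  virtual std::unique_ptr<BasePartitioner> clone() const = 0;
  virtual std::string name() const = 0;
};

// Hypothetical derived class; clone() copies *this via the copy constructor.
class GraphPartitioner : public BasePartitioner
{
public:
  explicit GraphPartitioner(int n_parts) : _n_parts(n_parts)
  {
    assert(n_parts > 0);
  }

  std::unique_ptr<BasePartitioner> clone() const override
  {
    // unique_ptr<GraphPartitioner> converts implicitly to unique_ptr<BasePartitioner>.
    return std::make_unique<GraphPartitioner>(*this);
  }

  std::string name() const override { return "graph"; }
  int n_parts() const { return _n_parts; }

private:
  int _n_parts;
};
```

Code that only holds a reference to the base class can then duplicate a partitioner without knowing its concrete type.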

◆ initialize()

|

private |

Initialize data structures.

Definition at line 214 of file parmetis_partitioner.C.

References libMesh::MeshTools::create_bounding_box(), end, libMesh::MeshCommunication::find_global_indices(), libMesh::DofObject::id(), mesh, and std::min().

◆ operator=() [1/2]

|

delete |

This class contains a unique_ptr member, so it can't be default copy assigned.

◆ operator=() [2/2]

|

default |

◆ partition() [1/2]

|

virtual inherited |

Partitions the MeshBase into n parts by setting processor_id() on Nodes and Elems.

- Note

- If you are implementing a new type of Partitioner, you most likely do not want to override the partition() function, see instead the protected virtual _do_partition() method below. The partition() function is responsible for doing a lot of libmesh-internals-specific setup and finalization before and after the _do_partition() function is called. The only responsibility of the _do_partition() function, on the other hand, is to set the processor IDs of the elements according to a specific partitioning algorithm. See, e.g. MetisPartitioner for an example.

Definition at line 57 of file partitioner.C.

References libMesh::Partitioner::_do_partition(), libMesh::MeshTools::libmesh_assert_valid_remote_elems(), mesh, std::min(), libMesh::Partitioner::partition_unpartitioned_elements(), libMesh::Partitioner::set_node_processor_ids(), libMesh::Partitioner::set_parent_processor_ids(), and libMesh::Partitioner::single_partition().

Referenced by _do_repartition(), and libMesh::Partitioner::partition().
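The division of labor described in the note, a public driver that handles setup and finalization around a protected, algorithm-specific hook, can be sketched in miniature. Everything here is hypothetical: `ToyMesh` is just one processor id per element, the class names are invented, and the driver is non-virtual for simplicity, whereas the member table above lists `partition()` as virtual.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A toy "mesh": one processor id per element.
using ToyMesh = std::vector<unsigned int>;

class ToyPartitioner
{
public:
  virtual ~ToyPartitioner() = default;

  // Driver: shared setup, then the algorithm-specific hook.
  void partition(ToyMesh & mesh, unsigned int n)
  {
    assert(n > 0);
    if (n == 1)
      {
        // Trivial case: everything goes to processor 0.
        for (auto & pid : mesh)
          pid = 0;
        return;
      }
    _do_partition(mesh, n);
    // Shared finalization (node ids, parent ids, ...) would go here.
  }

protected:
  // The only job of the hook is to assign element processor ids.
  virtual void _do_partition(ToyMesh & mesh, unsigned int n) = 0;
};

class ToyLinearPartitioner : public ToyPartitioner
{
protected:
  void _do_partition(ToyMesh & mesh, unsigned int n) override
  {
    // Contiguous blocks of roughly equal size.
    const std::size_t n_elem = mesh.size();
    for (std::size_t e = 0; e < n_elem; ++e)
      mesh[e] = static_cast<unsigned int>(e * n / n_elem);
  }
};
```

A new algorithm then only needs to override `_do_partition()`; the surrounding bookkeeping stays in one place.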

◆ partition() [2/2]

|

virtual inherited |

Partitions the MeshBase into mesh.n_processors() parts by setting processor_id() on Nodes and Elems.

- Note

- If you are implementing a new type of Partitioner, you most likely do not want to override the partition() function, see instead the protected virtual _do_partition() method below. The partition() function is responsible for doing a lot of libmesh-internals-specific setup and finalization before and after the _do_partition() function is called. The only responsibility of the _do_partition() function, on the other hand, is to set the processor IDs of the elements according to a specific partitioning algorithm. See, e.g. MetisPartitioner for an example.

Definition at line 50 of file partitioner.C.

References mesh, and libMesh::Partitioner::partition().

◆ partition_range()

|

inline virtual inherited |

Partitions elements in the range (it, end) into n parts. The mesh from which the iterators are created must also be passed in, since it is a parallel object and has other useful information in it.

Although partition_range() is part of the public Partitioner interface, it should not generally be called by applications. Its main purpose is to support the SubdomainPartitioner, which uses it internally to individually partition ranges of elements before combining them into the final partitioning. Most of the time, the protected _do_partition() function is implemented in terms of partition_range() by passing a range which includes all the elements of the Mesh.

Reimplemented in libMesh::CentroidPartitioner, libMesh::SFCPartitioner, libMesh::MappedSubdomainPartitioner, libMesh::LinearPartitioner, and libMesh::MetisPartitioner.

Definition at line 127 of file partitioner.h.

◆ partition_unpartitioned_elements() [1/2]

|

static inherited |

These functions assign processor IDs to newly-created elements (in parallel) which are currently assigned to processor 0.

Definition at line 187 of file partitioner.C.

References mesh.

Referenced by libMesh::Partitioner::partition(), and libMesh::Partitioner::repartition().

◆ partition_unpartitioned_elements() [2/2]

|

static inherited |

Definition at line 194 of file partitioner.C.

References libMesh::as_range(), libMesh::MeshTools::create_bounding_box(), end, libMesh::MeshCommunication::find_global_indices(), mesh, and libMesh::MeshTools::n_elem().

◆ processor_pairs_to_interface_nodes()

|

static inherited |

On the partitioning interface, a surface is shared by two and only two processors. Try to find which pair of processors corresponds to which surfaces, and store their nodes.

Definition at line 421 of file partitioner.C.

References libMesh::DofObject::invalid_processor_id, std::max(), mesh, std::min(), and n_nodes.

Referenced by libMesh::Partitioner::set_interface_node_processor_ids_BFS(), libMesh::Partitioner::set_interface_node_processor_ids_linear(), and libMesh::Partitioner::set_interface_node_processor_ids_petscpartitioner().
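The mapping from processor pairs to interface nodes can be sketched on a simplified input. The `Side` struct, the type aliases, and the function name below are hypothetical; the sketch assumes each shared side already knows the processor ids of the two elements it separates, and it normalizes each pair as (smaller id, larger id).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <set>
#include <utility>
#include <vector>

using ProcId = unsigned int;
using NodeId = std::size_t;

// Hypothetical description of one side shared by two elements.
struct Side
{
  ProcId owner;               // processor of the element on one side
  ProcId neighbor;            // processor of the element on the other side
  std::vector<NodeId> nodes;  // nodes lying on the shared side
};

std::map<std::pair<ProcId, ProcId>, std::set<NodeId>>
pairs_to_interface_nodes(const std::vector<Side> & sides)
{
  std::map<std::pair<ProcId, ProcId>, std::set<NodeId>> result;
  for (const Side & s : sides)
    {
      assert(!s.nodes.empty());
      if (s.owner == s.neighbor)
        continue; // interior to one partition, not on the interface

      // Order the pair so that (0,1) and (1,0) share one entry.
      const auto key = std::make_pair(std::min(s.owner, s.neighbor),
                                      std::max(s.owner, s.neighbor));
      result[key].insert(s.nodes.begin(), s.nodes.end());
    }
  return result;
}
```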

◆ repartition() [1/2]

|

inherited |

Repartitions the MeshBase into n parts. (Some partitioning algorithms can repartition more efficiently than computing a new partitioning from scratch.) The default behavior is to simply call this->partition(mesh,n).

Definition at line 124 of file partitioner.C.

References libMesh::Partitioner::_do_repartition(), mesh, std::min(), libMesh::Partitioner::partition_unpartitioned_elements(), libMesh::Partitioner::set_node_processor_ids(), libMesh::Partitioner::set_parent_processor_ids(), and libMesh::Partitioner::single_partition().

Referenced by libMesh::Partitioner::repartition().

◆ repartition() [2/2]

|

inherited |

Repartitions the MeshBase into mesh.n_processors() parts. This is required since some partitioning algorithms can repartition more efficiently than computing a new partitioning from scratch.

Definition at line 117 of file partitioner.C.

References mesh, and libMesh::Partitioner::repartition().

◆ set_interface_node_processor_ids_BFS()

|

static inherited |

For each pair of processors, nodes on the partitioning interface are clustered into two groups using a BFS (breadth-first search) scheme.

Definition at line 498 of file partitioner.C.

References libMesh::MeshTools::build_nodes_to_elem_map(), libMesh::MeshTools::find_nodal_neighbors(), mesh, and libMesh::Partitioner::processor_pairs_to_interface_nodes().

Referenced by libMesh::Partitioner::set_node_processor_ids().

◆ set_interface_node_processor_ids_linear()

|

static inherited |

Nodes on the partitioning interface are linearly assigned to each pair of processors.

Definition at line 474 of file partitioner.C.

References mesh, and libMesh::Partitioner::processor_pairs_to_interface_nodes().

Referenced by libMesh::Partitioner::set_node_processor_ids().

◆ set_interface_node_processor_ids_petscpartitioner()

|

static inherited |

For each pair of processors, nodes on the partitioning interface are partitioned into two groups using a PETSc partitioner.

Definition at line 575 of file partitioner.C.

References libMesh::MeshTools::build_nodes_to_elem_map(), libMesh::MeshTools::find_nodal_neighbors(), libMesh::libmesh_ignore(), mesh, and libMesh::Partitioner::processor_pairs_to_interface_nodes().

Referenced by libMesh::Partitioner::set_node_processor_ids().

◆ set_node_processor_ids()

|

static inherited |

This function is called after partitioning to set the processor IDs for the nodes. By definition, a Node's processor ID is the minimum processor ID for all of the elements which share the node.

Definition at line 679 of file partitioner.C.

References libMesh::as_range(), libMesh::Node::choose_processor_id(), libMesh::DofObject::invalid_processor_id, mesh, libMesh::MeshTools::n_elem(), libMesh::on_command_line(), libMesh::DofObject::processor_id(), libMesh::Parallel::pull_parallel_vector_data(), libMesh::Partitioner::set_interface_node_processor_ids_BFS(), libMesh::Partitioner::set_interface_node_processor_ids_linear(), and libMesh::Partitioner::set_interface_node_processor_ids_petscpartitioner().

Referenced by libMesh::MeshRefinement::_refine_elements(), libMesh::UnstructuredMesh::all_first_order(), libMesh::Partitioner::partition(), libMesh::XdrIO::read(), libMesh::Partitioner::repartition(), and libMesh::BoundaryInfo::sync().
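The minimum-processor-id rule stated above can be written as a short serial sketch. It covers only that rule, not the interface-specific variants referenced by this function; the function name, the connectivity layout, and the use of the largest `unsigned int` as an "invalid" marker are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// elem_nodes[e] lists the node ids of element e; elem_pid[e] is its processor id.
// Returns one processor id per node: the minimum over all elements sharing it.
std::vector<unsigned int>
min_rule_node_pids(const std::vector<std::vector<std::size_t>> & elem_nodes,
                   const std::vector<unsigned int> & elem_pid,
                   std::size_t n_nodes)
{
  assert(elem_nodes.size() == elem_pid.size());

  // Nodes touched by no element keep this marker value.
  const unsigned int invalid = std::numeric_limits<unsigned int>::max();
  std::vector<unsigned int> node_pid(n_nodes, invalid);

  for (std::size_t e = 0; e < elem_nodes.size(); ++e)
    for (std::size_t n : elem_nodes[e])
      {
        assert(n < n_nodes);
        node_pid[n] = std::min(node_pid[n], elem_pid[e]);
      }
  return node_pid;
}
```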

◆ set_parent_processor_ids()

|

static inherited |

This function is called after partitioning to set the processor IDs for the inactive parent elements. A parent's processor ID is the same as its first child.

Definition at line 268 of file partitioner.C.

References libMesh::as_range(), libMesh::Elem::child_ref_range(), libMesh::Partitioner::communication_blocksize, libMesh::DofObject::invalid_processor_id, libMesh::DofObject::invalidate_processor_id(), libMesh::libmesh_ignore(), mesh, std::min(), libMesh::MeshTools::n_elem(), libMesh::Elem::parent(), libMesh::DofObject::processor_id(), and libMesh::Elem::total_family_tree().

Referenced by libMesh::Partitioner::partition(), and libMesh::Partitioner::repartition().
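The first-child rule for inactive parents can be sketched on a flat element array. The `ToyElem` layout, the `invalid_pid` marker, and the recursive helper are hypothetical, and the sketch is serial; it only shows that ids propagate from the active leaves upward.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

const unsigned int invalid_pid = std::numeric_limits<unsigned int>::max();

// Hypothetical element record: children are indices into the same array.
struct ToyElem
{
  unsigned int pid;
  std::vector<std::size_t> children; // empty for active (leaf) elements
};

// Post-order walk: fix up the children first, then copy the first child's id.
void set_parent_pids(std::vector<ToyElem> & elems, std::size_t e)
{
  if (elems[e].children.empty())
    {
      // Active elements must already have been partitioned.
      assert(elems[e].pid != invalid_pid);
      return;
    }

  for (std::size_t c : elems[e].children)
    set_parent_pids(elems, c);

  elems[e].pid = elems[elems[e].children.front()].pid;
}
```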

◆ single_partition()

|

protected inherited |

Trivially "partitions" the mesh for one processor. Simply loops through the elements and assigns all of them to processor 0. It is provided as a separate function so that derived classes may use it without reimplementing it.

Definition at line 159 of file partitioner.C.

References libMesh::MeshBase::elements_begin(), mesh, and libMesh::Partitioner::single_partition_range().

Referenced by libMesh::SubdomainPartitioner::_do_partition(), libMesh::Partitioner::partition(), and libMesh::Partitioner::repartition().

◆ single_partition_range()

|

protected inherited |

Slightly generalized version of single_partition which acts on a range of elements defined by the pair of iterators (it, end).

Definition at line 172 of file partitioner.C.

References libMesh::as_range(), and end.

Referenced by libMesh::LinearPartitioner::partition_range(), libMesh::MetisPartitioner::partition_range(), libMesh::MappedSubdomainPartitioner::partition_range(), libMesh::SFCPartitioner::partition_range(), libMesh::CentroidPartitioner::partition_range(), and libMesh::Partitioner::single_partition().

Member Data Documentation

◆ _dual_graph

|

protected inherited |

A dual graph corresponds to the mesh and is typically used by a partitioner. Each vertex represents an element, and its neighbors in the graph are the element's neighbors in the mesh.

Definition at line 288 of file partitioner.h.

Referenced by libMesh::Partitioner::build_graph().
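To make the dual graph concrete, the sketch below builds one for a structured grid of quadrilateral elements and then flattens it into compressed (offsets plus adjacency) form, the kind of layout graph partitioners commonly consume. The grid mesh, the `Graph` and `CsrGraph` types, and both function names are assumptions for illustration and are unrelated to the libMesh implementation of `build_graph()`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// graph[e] lists the elements that share a side with element e.
using Graph = std::vector<std::vector<std::size_t>>;

// Dual graph of an nx-by-ny grid of quads, numbered row by row.
Graph build_dual_graph(std::size_t nx, std::size_t ny)
{
  Graph graph(nx * ny);
  for (std::size_t j = 0; j < ny; ++j)
    for (std::size_t i = 0; i < nx; ++i)
      {
        const std::size_t e = j * nx + i;
        if (i > 0)      graph[e].push_back(e - 1);   // left neighbor
        if (i + 1 < nx) graph[e].push_back(e + 1);   // right neighbor
        if (j > 0)      graph[e].push_back(e - nx);  // neighbor below
        if (j + 1 < ny) graph[e].push_back(e + nx);  // neighbor above
      }
  return graph;
}

// Compressed form: the neighbors of vertex v are
// adjacency[offsets[v]] .. adjacency[offsets[v + 1] - 1].
struct CsrGraph
{
  std::vector<std::size_t> offsets;
  std::vector<std::size_t> adjacency;
};

CsrGraph to_csr(const Graph & graph)
{
  CsrGraph csr;
  csr.offsets.push_back(0);
  for (const auto & nbrs : graph)
    {
      csr.adjacency.insert(csr.adjacency.end(), nbrs.begin(), nbrs.end());
      csr.offsets.push_back(csr.adjacency.size());
    }
  assert(csr.offsets.size() == graph.size() + 1);
  return csr;
}
```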

◆ _global_index_by_pid_map

|

protected inherited |

Maps active element ids into a contiguous range, as needed by the parallel partitioner.

Definition at line 272 of file partitioner.h.

Referenced by libMesh::Partitioner::_find_global_index_by_pid_map(), libMesh::Partitioner::assign_partitioning(), and libMesh::Partitioner::build_graph().

◆ _local_id_to_elem

|

protected inherited |

Definition at line 291 of file partitioner.h.

Referenced by libMesh::Partitioner::build_graph().

◆ _n_active_elem_on_proc

|

protected inherited |

The number of active elements on each processor.

- Note

- ParMETIS requires that each processor have some active elements; it will abort if any processor passes a nullptr _part array.

Definition at line 281 of file partitioner.h.

Referenced by libMesh::Partitioner::_find_global_index_by_pid_map(), libMesh::Partitioner::assign_partitioning(), and libMesh::Partitioner::build_graph().

◆ _pmetis

|

private |

Pointer to the Parmetis-specific data structures. Lets us avoid including parmetis.h here.

Definition at line 122 of file parmetis_partitioner.h.

◆ _weights

|

protected inherited |

The weights that might be used for partitioning.

Definition at line 267 of file partitioner.h.

Referenced by libMesh::MetisPartitioner::attach_weights(), and libMesh::MetisPartitioner::partition_range().

◆ communication_blocksize

|

static protected inherited |

The blocksize to use when doing blocked parallel communication. This limits the maximum vector size which can be used in a single communication step.

Definition at line 244 of file partitioner.h.

Referenced by libMesh::Partitioner::set_parent_processor_ids().
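Blocked communication of this kind amounts to walking a long vector in chunks of at most `blocksize` entries and handing each chunk to a communication step. The sketch below shows only the chunking; the helper name `for_each_block` and the callback signature are hypothetical, and the callback stands in for whatever parallel call would actually be made.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Calls send(pointer_to_first_entry, n_entries) once per block and
// returns the number of blocks processed.
template <typename T, typename F>
std::size_t for_each_block(const std::vector<T> & data, std::size_t blocksize, F && send)
{
  assert(blocksize > 0);
  std::size_t n_blocks = 0;
  for (std::size_t first = 0; first < data.size(); first += blocksize)
    {
      // The last block may be shorter than blocksize.
      const std::size_t last = std::min(first + blocksize, data.size());
      send(data.data() + first, last - first);
      ++n_blocks;
    }
  return n_blocks;
}
```

With ten entries and a block size of four, the callback is invoked three times, on blocks of four, four, and two entries, so no single step handles more than `blocksize` values.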

The documentation for this class was generated from the following files:

- parmetis_partitioner.h

- parmetis_partitioner.C
