#include <xdr_io.h>

Public Types | |

| typedef largest_id_type | xdr_id_type |

| typedef uint32_t | old_header_id_type |

| typedef uint64_t | new_header_id_type |

Public Member Functions | |

| XdrIO (MeshBase &, const bool=false) | |

| XdrIO (const MeshBase &, const bool=false) | |

| virtual | ~XdrIO () |

| virtual void | read (const std::string &) override |

| virtual void | write (const std::string &) override |

| bool | binary () const |

| bool & | binary () |

| bool | legacy () const |

| bool & | legacy () |

| bool | write_parallel () const |

| void | set_write_parallel (bool do_parallel=true) |

| void | set_auto_parallel () |

| const std::string & | version () const |

| std::string & | version () |

| const std::string & | boundary_condition_file_name () const |

| std::string & | boundary_condition_file_name () |

| const std::string & | partition_map_file_name () const |

| std::string & | partition_map_file_name () |

| const std::string & | subdomain_map_file_name () const |

| std::string & | subdomain_map_file_name () |

| const std::string & | polynomial_level_file_name () const |

| std::string & | polynomial_level_file_name () |

| bool | version_at_least_0_9_2 () const |

| bool | version_at_least_0_9_6 () const |

| bool | version_at_least_1_1_0 () const |

| bool | version_at_least_1_3_0 () const |

| virtual void | write_equation_systems (const std::string &, const EquationSystems &, const std::set< std::string > *system_names=nullptr) |

| virtual void | write_discontinuous_equation_systems (const std::string &, const EquationSystems &, const std::set< std::string > *system_names=nullptr) |

| virtual void | write_nodal_data (const std::string &, const std::vector< Number > &, const std::vector< std::string > &) |

| virtual void | write_nodal_data (const std::string &, const NumericVector< Number > &, const std::vector< std::string > &) |

| virtual void | write_nodal_data_discontinuous (const std::string &, const std::vector< Number > &, const std::vector< std::string > &) |

| unsigned int & | ascii_precision () |

| const Parallel::Communicator & | comm () const |

| processor_id_type | n_processors () const |

| processor_id_type | processor_id () const |

Protected Member Functions | |

| MeshBase & | mesh () |

| void | set_n_partitions (unsigned int n_parts) |

| void | skip_comment_lines (std::istream &in, const char comment_start) |

| const MeshBase & | mesh () const |

Protected Attributes | |

| std::vector< bool > | elems_of_dimension |

| const bool | _is_parallel_format |

| const bool | _serial_only_needed_on_proc_0 |

| const Parallel::Communicator & | _communicator |

Private Member Functions | |

| void | write_serialized_subdomain_names (Xdr &io) const |

| void | write_serialized_connectivity (Xdr &io, const dof_id_type n_elem) const |

| void | write_serialized_nodes (Xdr &io, const dof_id_type n_nodes) const |

| void | write_serialized_bcs_helper (Xdr &io, const new_header_id_type n_side_bcs, const std::string bc_type) const |

| void | write_serialized_side_bcs (Xdr &io, const new_header_id_type n_side_bcs) const |

| void | write_serialized_edge_bcs (Xdr &io, const new_header_id_type n_edge_bcs) const |

| void | write_serialized_shellface_bcs (Xdr &io, const new_header_id_type n_shellface_bcs) const |

| void | write_serialized_nodesets (Xdr &io, const new_header_id_type n_nodesets) const |

| void | write_serialized_bc_names (Xdr &io, const BoundaryInfo &info, bool is_sideset) const |

| template<typename T > | |

| void | read_header (Xdr &io, std::vector< T > &meta_data) |

| void | read_serialized_subdomain_names (Xdr &io) |

| template<typename T > | |

| void | read_serialized_connectivity (Xdr &io, const dof_id_type n_elem, std::vector< new_header_id_type > &sizes, T) |

| void | read_serialized_nodes (Xdr &io, const dof_id_type n_nodes) |

| template<typename T > | |

| void | read_serialized_bcs_helper (Xdr &io, T, const std::string bc_type) |

| template<typename T > | |

| void | read_serialized_side_bcs (Xdr &io, T) |

| template<typename T > | |

| void | read_serialized_edge_bcs (Xdr &io, T) |

| template<typename T > | |

| void | read_serialized_shellface_bcs (Xdr &io, T) |

| template<typename T > | |

| void | read_serialized_nodesets (Xdr &io, T) |

| void | read_serialized_bc_names (Xdr &io, BoundaryInfo &info, bool is_sideset) |

| void | pack_element (std::vector< xdr_id_type > &conn, const Elem *elem, const dof_id_type parent_id=DofObject::invalid_id, const dof_id_type parent_pid=DofObject::invalid_id) const |

Private Attributes | |

| bool | _binary |

| bool | _legacy |

| bool | _write_serial |

| bool | _write_parallel |

| bool | _write_unique_id |

| unsigned int | _field_width |

| std::string | _version |

| std::string | _bc_file_name |

| std::string | _partition_map_file |

| std::string | _subdomain_map_file |

| std::string | _p_level_file |

Static Private Attributes | |

| static const std::size_t | io_blksize = 128000 |

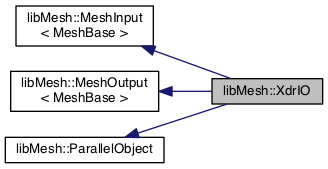

Detailed Description

MeshIO class used for reading and writing XDR (eXternal Data Representation) and XDA mesh files. XDR/XDA is libMesh's internal data format; it allows the full refinement tree structure of the mesh to be written to file.

- Date: 2004

Member Typedef Documentation

◆ new_header_id_type

| typedef uint64_t libMesh::XdrIO::new_header_id_type |

◆ old_header_id_type

| typedef uint32_t libMesh::XdrIO::old_header_id_type |

◆ xdr_id_type

Constructor & Destructor Documentation

◆ XdrIO() [1/2]

|

explicit |

Constructor. Takes a writable reference to a mesh object. This is the constructor required to read a mesh. The optional parameter `binary` switches between ASCII (false, the default) and binary (true) files.

Definition at line 129 of file xdr_io.C.

◆ XdrIO() [2/2]

|

explicit |

Constructor. Takes a reference to a constant mesh object. This constructor only allows the mesh to be written. The optional parameter `binary` switches between ASCII (false, the default) and binary (true) files.

Definition at line 153 of file xdr_io.C.

◆ ~XdrIO()

Member Function Documentation

◆ ascii_precision()

|

inline, inherited |

Return/set the precision to use when writing ASCII files.

By default we use numeric_limits<Real>::digits10 + 2, which should be enough to write out to ASCII and get the exact same Real back when reading in.
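The round-trip claim above can be checked with a small standalone sketch. It does not use libMesh: `Real` is assumed to be `double`, and the helper names `default_ascii_precision`, `to_ascii`, and `round_trips` are hypothetical, introduced only for illustration.

```cpp
#include <cassert>
#include <iomanip>
#include <limits>
#include <sstream>
#include <string>

// Assumption for this sketch: Real is double.
using Real = double;

// The default described above: digits10 + 2 significant digits.
inline int default_ascii_precision()
{
  return std::numeric_limits<Real>::digits10 + 2;
}

// Format a value as ASCII text with the given number of significant digits.
inline std::string to_ascii(Real value, int precision)
{
  assert(precision > 0);
  std::ostringstream out;
  out << std::setprecision(precision) << value;
  return out.str();
}

// True if writing the value as text and parsing it back reproduces it exactly.
inline bool round_trips(Real value, int precision = default_ascii_precision())
{
  return std::stod(to_ascii(value, precision)) == value;
}
```

With an IEEE double, `digits10` is 15, so the default is 17 significant digits, which is enough to recover the same value. A lower precision such as 6 loses information for a value like `1.0 / 3.0`.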

Definition at line 244 of file mesh_output.h.

Referenced by libMesh::TecplotIO::write_ascii(), libMesh::GMVIO::write_ascii_new_impl(), and libMesh::GMVIO::write_ascii_old_impl().

◆ binary() [1/2]

|

inline |

◆ binary() [2/2]

|

inline |

◆ boundary_condition_file_name() [1/2]

|

inline |

Get/Set the boundary condition file name.
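The get/set pairing relies on two overloads: a `const` one returning a read-only reference and a non-`const` one returning a writable reference. The following is a minimal sketch of that pattern with a hypothetical stand-in class (`FileNameHolder` and `set_name` are not part of libMesh).

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for the file-name members of XdrIO.
class FileNameHolder
{
public:
  // Read-only access, usable on const objects.
  const std::string & boundary_condition_file_name() const { return _bc_file_name; }

  // Writable access: assigning through the returned reference sets the name.
  std::string & boundary_condition_file_name() { return _bc_file_name; }

private:
  std::string _bc_file_name;
};

// Set the name through the writable overload, then read it back through
// the const overload.
inline const std::string & set_name(FileNameHolder & io, const std::string & name)
{
  io.boundary_condition_file_name() = name;
  const FileNameHolder & read_only = io;
  assert(read_only.boundary_condition_file_name() == name);
  return read_only.boundary_condition_file_name();
}
```

The same shape applies to the other accessor pairs on this page, such as `partition_map_file_name()` and `version()`.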

Definition at line 146 of file xdr_io.h.

References _bc_file_name.

Referenced by read_header(), read_serialized_bcs_helper(), read_serialized_nodesets(), and write().

◆ boundary_condition_file_name() [2/2]

|

inline |

◆ comm()

|

inline, inherited |

- Returns

- A reference to the Parallel::Communicator object used by this mesh.

Definition at line 89 of file parallel_object.h.

References libMesh::ParallelObject::_communicator.

Referenced by libMesh::__libmesh_petsc_diff_solver_jacobian(), libMesh::__libmesh_petsc_diff_solver_monitor(), libMesh::__libmesh_petsc_diff_solver_residual(), libMesh::__libmesh_tao_equality_constraints(), libMesh::__libmesh_tao_equality_constraints_jacobian(), libMesh::__libmesh_tao_gradient(), libMesh::__libmesh_tao_hessian(), libMesh::__libmesh_tao_inequality_constraints(), libMesh::__libmesh_tao_inequality_constraints_jacobian(), libMesh::__libmesh_tao_objective(), libMesh::MeshRefinement::_coarsen_elements(), libMesh::ExactSolution::_compute_error(), libMesh::UniformRefinementEstimator::_estimate_error(), libMesh::BoundaryInfo::_find_id_maps(), libMesh::SlepcEigenSolver< T >::_petsc_shell_matrix_get_diagonal(), libMesh::PetscLinearSolver< T >::_petsc_shell_matrix_get_diagonal(), libMesh::SlepcEigenSolver< T >::_petsc_shell_matrix_mult(), libMesh::PetscLinearSolver< T >::_petsc_shell_matrix_mult(), libMesh::PetscLinearSolver< T >::_petsc_shell_matrix_mult_add(), libMesh::EquationSystems::_read_impl(), libMesh::MeshRefinement::_refine_elements(), libMesh::MeshRefinement::_smooth_flags(), libMesh::PetscDMWrapper::add_dofs_helper(), libMesh::PetscDMWrapper::add_dofs_to_section(), libMesh::ImplicitSystem::add_matrix(), libMesh::System::add_vector(), libMesh::UnstructuredMesh::all_second_order(), libMesh::MeshTools::Modification::all_tri(), libMesh::LaplaceMeshSmoother::allgather_graph(), libMesh::FEMSystem::assemble_qoi(), libMesh::MeshCommunication::assign_global_indices(), libMesh::DofMap::attach_matrix(), libMesh::MeshTools::Generation::build_extrusion(), libMesh::BoundaryInfo::build_node_list_from_side_list(), libMesh::EquationSystems::build_parallel_elemental_solution_vector(), libMesh::EquationSystems::build_parallel_solution_vector(), libMesh::PetscDMWrapper::build_section(), libMesh::PetscDMWrapper::build_sf(), libMesh::MeshBase::cache_elem_dims(), libMesh::System::calculate_norm(), libMesh::DofMap::check_dirichlet_bcid_consistency(), 
libMesh::PetscDMWrapper::check_section_n_dofs(), libMesh::Nemesis_IO_Helper::compute_num_global_elem_blocks(), libMesh::Nemesis_IO_Helper::compute_num_global_nodesets(), libMesh::Nemesis_IO_Helper::compute_num_global_sidesets(), libMesh::Problem_Interface::computeF(), libMesh::Problem_Interface::computeJacobian(), libMesh::Problem_Interface::computePreconditioner(), libMesh::ExodusII_IO::copy_elemental_solution(), libMesh::MeshTools::correct_node_proc_ids(), libMesh::MeshTools::create_bounding_box(), libMesh::MeshTools::create_nodal_bounding_box(), libMesh::MeshRefinement::create_parent_error_vector(), libMesh::MeshTools::create_processor_bounding_box(), libMesh::MeshTools::create_subdomain_bounding_box(), libMesh::MeshCommunication::delete_remote_elements(), libMesh::DofMap::distribute_dofs(), DMlibMeshFunction(), DMlibMeshJacobian(), DMlibMeshSetSystem_libMesh(), DMVariableBounds_libMesh(), libMesh::MeshRefinement::eliminate_unrefined_patches(), libMesh::EpetraVector< T >::EpetraVector(), libMesh::WeightedPatchRecoveryErrorEstimator::estimate_error(), libMesh::PatchRecoveryErrorEstimator::estimate_error(), libMesh::JumpErrorEstimator::estimate_error(), libMesh::AdjointRefinementEstimator::estimate_error(), libMesh::ExactErrorEstimator::estimate_error(), libMesh::MeshRefinement::flag_elements_by_elem_fraction(), libMesh::MeshRefinement::flag_elements_by_error_fraction(), libMesh::MeshRefinement::flag_elements_by_nelem_target(), libMesh::CondensedEigenSystem::get_eigenpair(), libMesh::DofMap::get_info(), libMesh::ImplicitSystem::get_linear_solver(), libMesh::LocationMap< T >::init(), libMesh::TimeSolver::init(), libMesh::SystemSubsetBySubdomain::init(), libMesh::PetscDMWrapper::init_and_attach_petscdm(), libMesh::EigenSystem::init_data(), libMesh::EigenSystem::init_matrices(), libMesh::OptimizationSystem::initialize_equality_constraints_storage(), libMesh::OptimizationSystem::initialize_inequality_constraints_storage(), 
libMesh::MeshTools::libmesh_assert_consistent_distributed(), libMesh::MeshTools::libmesh_assert_consistent_distributed_nodes(), libMesh::MeshTools::libmesh_assert_contiguous_dof_ids(), libMesh::MeshTools::libmesh_assert_parallel_consistent_new_node_procids(), libMesh::MeshTools::libmesh_assert_parallel_consistent_procids< Elem >(), libMesh::MeshTools::libmesh_assert_parallel_consistent_procids< Node >(), libMesh::MeshTools::libmesh_assert_topology_consistent_procids< Node >(), libMesh::MeshTools::libmesh_assert_valid_boundary_ids(), libMesh::MeshTools::libmesh_assert_valid_dof_ids(), libMesh::MeshTools::libmesh_assert_valid_neighbors(), libMesh::DistributedMesh::libmesh_assert_valid_parallel_flags(), libMesh::DistributedMesh::libmesh_assert_valid_parallel_object_ids(), libMesh::DistributedMesh::libmesh_assert_valid_parallel_p_levels(), libMesh::MeshTools::libmesh_assert_valid_refinement_flags(), libMesh::MeshTools::libmesh_assert_valid_unique_ids(), libMesh::libmesh_petsc_snes_fd_residual(), libMesh::libmesh_petsc_snes_jacobian(), libMesh::libmesh_petsc_snes_mffd_residual(), libMesh::libmesh_petsc_snes_postcheck(), libMesh::libmesh_petsc_snes_residual(), libMesh::libmesh_petsc_snes_residual_helper(), libMesh::MeshRefinement::limit_level_mismatch_at_edge(), libMesh::MeshRefinement::limit_level_mismatch_at_node(), libMesh::MeshRefinement::limit_overrefined_boundary(), libMesh::MeshRefinement::limit_underrefined_boundary(), libMesh::MeshRefinement::make_coarsening_compatible(), libMesh::MeshCommunication::make_elems_parallel_consistent(), libMesh::MeshRefinement::make_flags_parallel_consistent(), libMesh::MeshCommunication::make_new_node_proc_ids_parallel_consistent(), libMesh::MeshCommunication::make_new_nodes_parallel_consistent(), libMesh::MeshCommunication::make_node_ids_parallel_consistent(), libMesh::MeshCommunication::make_node_proc_ids_parallel_consistent(), libMesh::MeshCommunication::make_node_unique_ids_parallel_consistent(), 
libMesh::MeshCommunication::make_nodes_parallel_consistent(), libMesh::MeshCommunication::make_p_levels_parallel_consistent(), libMesh::MeshRefinement::make_refinement_compatible(), libMesh::FEMSystem::mesh_position_set(), libMesh::DistributedMesh::n_active_elem(), libMesh::MeshTools::n_active_levels(), libMesh::BoundaryInfo::n_boundary_conds(), libMesh::BoundaryInfo::n_edge_conds(), libMesh::CondensedEigenSystem::n_global_non_condensed_dofs(), libMesh::MeshTools::n_levels(), libMesh::BoundaryInfo::n_nodeset_conds(), libMesh::MeshTools::n_p_levels(), libMesh::BoundaryInfo::n_shellface_conds(), libMesh::DistributedMesh::parallel_max_elem_id(), libMesh::DistributedMesh::parallel_max_node_id(), libMesh::ReplicatedMesh::parallel_max_unique_id(), libMesh::DistributedMesh::parallel_max_unique_id(), libMesh::DistributedMesh::parallel_n_elem(), libMesh::DistributedMesh::parallel_n_nodes(), libMesh::SparsityPattern::Build::parallel_sync(), libMesh::MeshTools::paranoid_n_levels(), libMesh::petsc_auto_fieldsplit(), libMesh::System::point_gradient(), libMesh::System::point_hessian(), libMesh::System::point_value(), libMesh::MeshBase::prepare_for_use(), libMesh::Nemesis_IO::read(), read(), libMesh::CheckpointIO::read_header(), read_header(), libMesh::System::read_header(), libMesh::System::read_legacy_data(), libMesh::System::read_SCALAR_dofs(), read_serialized_bc_names(), read_serialized_bcs_helper(), libMesh::System::read_serialized_blocked_dof_objects(), read_serialized_connectivity(), read_serialized_nodes(), read_serialized_nodesets(), read_serialized_subdomain_names(), libMesh::System::read_serialized_vector(), libMesh::MeshBase::recalculate_n_partitions(), libMesh::MeshRefinement::refine_and_coarsen_elements(), libMesh::DistributedMesh::renumber_dof_objects(), libMesh::CheckpointIO::select_split_config(), libMesh::DofMap::set_nonlocal_dof_objects(), libMesh::PetscDMWrapper::set_point_range_in_section(), libMesh::PetscDiffSolver::setup_petsc_data(), 
libMesh::LaplaceMeshSmoother::smooth(), libMesh::split_mesh(), libMesh::MeshBase::subdomain_ids(), libMesh::BoundaryInfo::sync(), libMesh::MeshRefinement::test_level_one(), libMesh::MeshRefinement::test_unflagged(), libMesh::MeshTools::total_weight(), libMesh::MeshRefinement::uniformly_coarsen(), libMesh::NameBasedIO::write(), write(), libMesh::System::write_SCALAR_dofs(), write_serialized_bcs_helper(), libMesh::System::write_serialized_blocked_dof_objects(), write_serialized_connectivity(), write_serialized_nodes(), and write_serialized_nodesets().

◆ legacy() [1/2]

|

inline |

◆ legacy() [2/2]

|

inline |

◆ mesh() [1/2]

|

inline, protected, inherited |

- Returns

- The object as a writable reference.

Definition at line 169 of file mesh_input.h.

Referenced by libMesh::GMVIO::_read_one_cell(), libMesh::VTKIO::cells_to_vtk(), libMesh::TetGenIO::element_in(), libMesh::UNVIO::elements_in(), libMesh::UNVIO::elements_out(), libMesh::UNVIO::groups_in(), libMesh::TetGenIO::node_in(), libMesh::UNVIO::nodes_in(), libMesh::UNVIO::nodes_out(), libMesh::VTKIO::nodes_to_vtk(), libMesh::Nemesis_IO::prepare_to_write_nodal_data(), libMesh::Nemesis_IO::read(), libMesh::ExodusII_IO::read(), libMesh::GMVIO::read(), read(), libMesh::CheckpointIO::read(), libMesh::VTKIO::read(), libMesh::CheckpointIO::read_bcs(), libMesh::CheckpointIO::read_connectivity(), libMesh::CheckpointIO::read_header(), read_header(), libMesh::UCDIO::read_implementation(), libMesh::UNVIO::read_implementation(), libMesh::GmshIO::read_mesh(), libMesh::CheckpointIO::read_nodes(), libMesh::CheckpointIO::read_nodesets(), libMesh::CheckpointIO::read_remote_elem(), read_serialized_bcs_helper(), read_serialized_connectivity(), read_serialized_nodes(), read_serialized_nodesets(), read_serialized_subdomain_names(), libMesh::OFFIO::read_stream(), libMesh::MatlabIO::read_stream(), libMesh::CheckpointIO::read_subdomain_names(), libMesh::TetGenIO::write(), libMesh::Nemesis_IO::write(), libMesh::ExodusII_IO::write(), write(), libMesh::CheckpointIO::write(), libMesh::GMVIO::write_ascii_new_impl(), libMesh::GMVIO::write_ascii_old_impl(), libMesh::GMVIO::write_binary(), libMesh::GMVIO::write_discontinuous_gmv(), libMesh::Nemesis_IO::write_element_data(), libMesh::ExodusII_IO::write_element_data(), libMesh::UCDIO::write_header(), libMesh::UCDIO::write_implementation(), libMesh::UCDIO::write_interior_elems(), libMesh::GmshIO::write_mesh(), libMesh::VTKIO::write_nodal_data(), libMesh::UCDIO::write_nodal_data(), libMesh::ExodusII_IO::write_nodal_data(), libMesh::ExodusII_IO::write_nodal_data_common(), libMesh::ExodusII_IO::write_nodal_data_discontinuous(), libMesh::UCDIO::write_nodes(), libMesh::CheckpointIO::write_nodesets(), write_parallel(), libMesh::GmshIO::write_post(), 
write_serialized_bcs_helper(), write_serialized_connectivity(), write_serialized_nodes(), write_serialized_nodesets(), write_serialized_subdomain_names(), libMesh::UCDIO::write_soln(), and libMesh::CheckpointIO::write_subdomain_names().

◆ mesh() [2/2]

|

inline, protected, inherited |

- Returns

- The object as a read-only reference.

Definition at line 234 of file mesh_output.h.

Referenced by libMesh::FroIO::write(), libMesh::TecplotIO::write(), libMesh::MEDITIO::write(), libMesh::PostscriptIO::write(), libMesh::EnsightIO::write(), libMesh::TecplotIO::write_ascii(), libMesh::TecplotIO::write_binary(), libMesh::TecplotIO::write_nodal_data(), libMesh::MEDITIO::write_nodal_data(), and libMesh::GnuPlotIO::write_solution().

◆ n_processors()

|

inline, inherited |

- Returns

- The number of processors in the group.

Definition at line 95 of file parallel_object.h.

References libMesh::ParallelObject::_communicator, and libMesh::Parallel::Communicator::size().

Referenced by libMesh::BoundaryInfo::_find_id_maps(), libMesh::PetscDMWrapper::add_dofs_to_section(), libMesh::DistributedMesh::add_elem(), libMesh::DistributedMesh::add_node(), libMesh::LaplaceMeshSmoother::allgather_graph(), libMesh::FEMSystem::assembly(), libMesh::AztecLinearSolver< T >::AztecLinearSolver(), libMesh::BoundaryInfo::build_node_list_from_side_list(), libMesh::EquationSystems::build_parallel_elemental_solution_vector(), libMesh::DistributedMesh::clear(), libMesh::Nemesis_IO_Helper::compute_border_node_ids(), libMesh::Nemesis_IO_Helper::construct_nemesis_filename(), libMesh::UnstructuredMesh::create_pid_mesh(), libMesh::MeshTools::create_processor_bounding_box(), libMesh::DofMap::distribute_dofs(), libMesh::DofMap::distribute_local_dofs_node_major(), libMesh::DofMap::distribute_local_dofs_var_major(), libMesh::EnsightIO::EnsightIO(), libMesh::MeshBase::get_info(), libMesh::SystemSubsetBySubdomain::init(), libMesh::PetscDMWrapper::init_and_attach_petscdm(), libMesh::Nemesis_IO_Helper::initialize(), libMesh::DistributedMesh::insert_elem(), libMesh::MeshTools::libmesh_assert_contiguous_dof_ids(), libMesh::MeshTools::libmesh_assert_parallel_consistent_new_node_procids(), libMesh::MeshTools::libmesh_assert_parallel_consistent_procids< Elem >(), libMesh::MeshTools::libmesh_assert_parallel_consistent_procids< Node >(), libMesh::MeshTools::libmesh_assert_topology_consistent_procids< Node >(), libMesh::MeshTools::libmesh_assert_valid_boundary_ids(), libMesh::MeshTools::libmesh_assert_valid_dof_ids(), libMesh::MeshTools::libmesh_assert_valid_neighbors(), libMesh::MeshTools::libmesh_assert_valid_refinement_flags(), libMesh::DofMap::local_variable_indices(), libMesh::MeshRefinement::make_coarsening_compatible(), libMesh::MeshBase::n_active_elem_on_proc(), libMesh::MeshBase::n_elem_on_proc(), libMesh::MeshBase::n_nodes_on_proc(), libMesh::MeshBase::partition(), libMesh::PetscLinearSolver< T >::PetscLinearSolver(), libMesh::System::point_gradient(), 
libMesh::System::point_hessian(), libMesh::System::point_value(), libMesh::NameBasedIO::read(), libMesh::Nemesis_IO::read(), libMesh::CheckpointIO::read(), libMesh::CheckpointIO::read_connectivity(), read_header(), libMesh::CheckpointIO::read_nodes(), libMesh::System::read_parallel_data(), libMesh::System::read_SCALAR_dofs(), libMesh::System::read_serialized_blocked_dof_objects(), libMesh::System::read_serialized_vector(), libMesh::DistributedMesh::renumber_dof_objects(), libMesh::DofMap::set_nonlocal_dof_objects(), libMesh::PetscDMWrapper::set_point_range_in_section(), libMesh::MeshRefinement::uniformly_coarsen(), libMesh::DistributedMesh::update_parallel_id_counts(), libMesh::GMVIO::write_binary(), libMesh::GMVIO::write_discontinuous_gmv(), libMesh::System::write_parallel_data(), libMesh::System::write_SCALAR_dofs(), write_serialized_bcs_helper(), libMesh::System::write_serialized_blocked_dof_objects(), write_serialized_connectivity(), write_serialized_nodes(), and write_serialized_nodesets().

◆ pack_element()

|

private |

Pack an element into a transfer buffer for parallel communication.
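As an illustration of what packing into a flat buffer can look like, here is a standalone sketch. The `SketchElem` struct and the field order are assumptions made for this example and are not necessarily the layout used in xdr_io.C. Only the general shape (a flat `std::vector` of ids, with optional parent information) follows the signature listed above.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <vector>

using xdr_id_type = std::uint64_t;  // assumption: a 64-bit id type

// Stand-in for DofObject::invalid_id in this sketch.
const xdr_id_type invalid_id = std::numeric_limits<xdr_id_type>::max();

// Hypothetical, simplified element record (not libMesh::Elem).
struct SketchElem
{
  xdr_id_type type;
  xdr_id_type unique_id;
  xdr_id_type processor_id;
  xdr_id_type subdomain_id;
  xdr_id_type p_level;
  std::vector<xdr_id_type> node_ids;
};

// Append one element to the flat buffer. Assumed layout:
// n_nodes, type, unique_id, [parent_id, parent_pid], processor_id,
// subdomain_id, p_level, node ids...
inline void pack_element(std::vector<xdr_id_type> & conn,
                         const SketchElem & elem,
                         const xdr_id_type parent_id = invalid_id,
                         const xdr_id_type parent_pid = invalid_id)
{
  conn.push_back(elem.node_ids.size());
  conn.push_back(elem.type);
  conn.push_back(elem.unique_id);
  if (parent_id != invalid_id)
    {
      // Parent information is only stored for child elements.
      assert(parent_pid != invalid_id);
      conn.push_back(parent_id);
      conn.push_back(parent_pid);
    }
  conn.push_back(elem.processor_id);
  conn.push_back(elem.subdomain_id);
  conn.push_back(elem.p_level);
  for (const xdr_id_type node : elem.node_ids)
    conn.push_back(node);
}
```

Storing the node count first lets a reader of the buffer know how many node ids follow without consulting the element type.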

Definition at line 2120 of file xdr_io.C.

References libMesh::DofObject::invalid_id, libMesh::Elem::n_nodes(), libMesh::Elem::node_id(), libMesh::Elem::node_index_range(), libMesh::Elem::p_level(), libMesh::DofObject::processor_id(), libMesh::Elem::subdomain_id(), libMesh::Elem::type(), libMesh::Elem::type_to_n_nodes_map, and libMesh::DofObject::unique_id().

Referenced by write_serialized_connectivity().

◆ partition_map_file_name() [1/2]

|

inline |

Get/Set the partitioning file name.

Definition at line 152 of file xdr_io.h.

References _partition_map_file.

Referenced by read_header(), read_serialized_connectivity(), write(), and write_serialized_connectivity().

◆ partition_map_file_name() [2/2]

|

inline |

◆ polynomial_level_file_name() [1/2]

|

inline |

Get/Set the polynomial degree file name.

Definition at line 164 of file xdr_io.h.

References _p_level_file.

Referenced by read_header(), read_serialized_connectivity(), write(), and write_serialized_connectivity().

◆ polynomial_level_file_name() [2/2]

|

inline |

◆ processor_id()

|

inline, inherited |

- Returns

- The rank of this processor in the group.

Definition at line 101 of file parallel_object.h.

References libMesh::ParallelObject::_communicator, and libMesh::Parallel::Communicator::rank().

Referenced by libMesh::BoundaryInfo::_find_id_maps(), libMesh::EquationSystems::_read_impl(), libMesh::PetscDMWrapper::add_dofs_to_section(), libMesh::DistributedMesh::add_elem(), libMesh::BoundaryInfo::add_elements(), libMesh::DofMap::add_neighbors_to_send_list(), libMesh::DistributedMesh::add_node(), libMesh::UnstructuredMesh::all_second_order(), libMesh::MeshTools::Modification::all_tri(), libMesh::FEMSystem::assembly(), libMesh::EquationSystems::build_discontinuous_solution_vector(), libMesh::Nemesis_IO_Helper::build_element_and_node_maps(), libMesh::InfElemBuilder::build_inf_elem(), libMesh::BoundaryInfo::build_node_list_from_side_list(), libMesh::EquationSystems::build_parallel_elemental_solution_vector(), libMesh::DistributedMesh::clear(), libMesh::ExodusII_IO_Helper::close(), libMesh::Nemesis_IO_Helper::compute_border_node_ids(), libMesh::Nemesis_IO_Helper::compute_communication_map_parameters(), libMesh::Nemesis_IO_Helper::compute_internal_and_border_elems_and_internal_nodes(), libMesh::Nemesis_IO_Helper::compute_node_communication_maps(), libMesh::Nemesis_IO_Helper::compute_num_global_elem_blocks(), libMesh::Nemesis_IO_Helper::compute_num_global_nodesets(), libMesh::Nemesis_IO_Helper::compute_num_global_sidesets(), libMesh::Nemesis_IO_Helper::construct_nemesis_filename(), libMesh::MeshTools::correct_node_proc_ids(), libMesh::ExodusII_IO_Helper::create(), libMesh::DistributedMesh::delete_elem(), libMesh::DistributedMesh::delete_node(), libMesh::MeshCommunication::delete_remote_elements(), libMesh::DofMap::distribute_dofs(), libMesh::DofMap::distribute_local_dofs_node_major(), libMesh::DofMap::distribute_local_dofs_var_major(), libMesh::DistributedMesh::DistributedMesh(), libMesh::DofMap::end_dof(), libMesh::DofMap::end_old_dof(), libMesh::EnsightIO::EnsightIO(), libMesh::MeshFunction::find_element(), libMesh::MeshFunction::find_elements(), libMesh::UnstructuredMesh::find_neighbors(), libMesh::DofMap::first_dof(), libMesh::DofMap::first_old_dof(), 
libMesh::Nemesis_IO_Helper::get_cmap_params(), libMesh::Nemesis_IO_Helper::get_eb_info_global(), libMesh::Nemesis_IO_Helper::get_elem_cmap(), libMesh::Nemesis_IO_Helper::get_elem_map(), libMesh::MeshBase::get_info(), libMesh::DofMap::get_info(), libMesh::Nemesis_IO_Helper::get_init_global(), libMesh::Nemesis_IO_Helper::get_init_info(), libMesh::Nemesis_IO_Helper::get_loadbal_param(), libMesh::Nemesis_IO_Helper::get_node_cmap(), libMesh::Nemesis_IO_Helper::get_node_map(), libMesh::Nemesis_IO_Helper::get_ns_param_global(), libMesh::Nemesis_IO_Helper::get_ss_param_global(), libMesh::SparsityPattern::Build::handle_vi_vj(), libMesh::SystemSubsetBySubdomain::init(), libMesh::ExodusII_IO_Helper::initialize(), libMesh::ExodusII_IO_Helper::initialize_element_variables(), libMesh::ExodusII_IO_Helper::initialize_global_variables(), libMesh::ExodusII_IO_Helper::initialize_nodal_variables(), libMesh::DistributedMesh::insert_elem(), libMesh::DofMap::is_evaluable(), libMesh::SparsityPattern::Build::join(), libMesh::DofMap::last_dof(), libMesh::MeshTools::libmesh_assert_consistent_distributed(), libMesh::MeshTools::libmesh_assert_consistent_distributed_nodes(), libMesh::MeshTools::libmesh_assert_contiguous_dof_ids(), libMesh::MeshTools::libmesh_assert_parallel_consistent_procids< Elem >(), libMesh::MeshTools::libmesh_assert_valid_neighbors(), libMesh::DistributedMesh::libmesh_assert_valid_parallel_object_ids(), libMesh::DofMap::local_variable_indices(), libMesh::MeshRefinement::make_coarsening_compatible(), libMesh::MeshBase::n_active_local_elem(), libMesh::BoundaryInfo::n_boundary_conds(), libMesh::BoundaryInfo::n_edge_conds(), libMesh::DofMap::n_local_dofs(), libMesh::System::n_local_dofs(), libMesh::MeshBase::n_local_elem(), libMesh::MeshBase::n_local_nodes(), libMesh::BoundaryInfo::n_nodeset_conds(), libMesh::BoundaryInfo::n_shellface_conds(), libMesh::SparsityPattern::Build::operator()(), libMesh::DistributedMesh::own_node(), libMesh::System::point_gradient(), 
libMesh::System::point_hessian(), libMesh::System::point_value(), libMesh::Nemesis_IO_Helper::put_cmap_params(), libMesh::Nemesis_IO_Helper::put_elem_cmap(), libMesh::Nemesis_IO_Helper::put_elem_map(), libMesh::Nemesis_IO_Helper::put_loadbal_param(), libMesh::Nemesis_IO_Helper::put_node_cmap(), libMesh::Nemesis_IO_Helper::put_node_map(), libMesh::NameBasedIO::read(), libMesh::Nemesis_IO::read(), read(), libMesh::CheckpointIO::read(), libMesh::ExodusII_IO_Helper::read_elem_num_map(), libMesh::ExodusII_IO_Helper::read_global_values(), libMesh::CheckpointIO::read_header(), read_header(), libMesh::System::read_header(), libMesh::System::read_legacy_data(), libMesh::ExodusII_IO_Helper::read_node_num_map(), libMesh::System::read_parallel_data(), libMesh::System::read_SCALAR_dofs(), read_serialized_bc_names(), read_serialized_bcs_helper(), libMesh::System::read_serialized_blocked_dof_objects(), read_serialized_connectivity(), libMesh::System::read_serialized_data(), read_serialized_nodes(), read_serialized_nodesets(), read_serialized_subdomain_names(), libMesh::System::read_serialized_vector(), libMesh::System::read_serialized_vectors(), libMesh::DistributedMesh::renumber_dof_objects(), libMesh::CheckpointIO::select_split_config(), libMesh::DofMap::set_nonlocal_dof_objects(), libMesh::PetscDMWrapper::set_point_range_in_section(), libMesh::LaplaceMeshSmoother::smooth(), libMesh::MeshTools::total_weight(), libMesh::MeshRefinement::uniformly_coarsen(), libMesh::Parallel::Packing< T >::unpack(), libMesh::DistributedMesh::update_parallel_id_counts(), libMesh::NameBasedIO::write(), write(), libMesh::CheckpointIO::write(), libMesh::EquationSystems::write(), libMesh::GMVIO::write_discontinuous_gmv(), libMesh::ExodusII_IO::write_element_data(), libMesh::ExodusII_IO_Helper::write_element_values(), libMesh::ExodusII_IO_Helper::write_elements(), libMesh::ExodusII_IO::write_global_data(), libMesh::ExodusII_IO_Helper::write_global_values(), libMesh::System::write_header(), 
libMesh::ExodusII_IO::write_information_records(), libMesh::ExodusII_IO_Helper::write_information_records(), libMesh::ExodusII_IO_Helper::write_nodal_coordinates(), libMesh::UCDIO::write_nodal_data(), libMesh::ExodusII_IO::write_nodal_data(), libMesh::ExodusII_IO::write_nodal_data_discontinuous(), libMesh::ExodusII_IO_Helper::write_nodal_values(), libMesh::Nemesis_IO_Helper::write_nodesets(), libMesh::ExodusII_IO_Helper::write_nodesets(), libMesh::System::write_parallel_data(), libMesh::System::write_SCALAR_dofs(), write_serialized_bc_names(), write_serialized_bcs_helper(), libMesh::System::write_serialized_blocked_dof_objects(), write_serialized_connectivity(), libMesh::System::write_serialized_data(), write_serialized_nodes(), write_serialized_nodesets(), write_serialized_subdomain_names(), libMesh::System::write_serialized_vector(), libMesh::System::write_serialized_vectors(), libMesh::Nemesis_IO_Helper::write_sidesets(), libMesh::ExodusII_IO_Helper::write_sidesets(), libMesh::ExodusII_IO::write_timestep(), libMesh::ExodusII_IO_Helper::write_timestep(), and libMesh::ExodusII_IO::write_timestep_discontinuous().

◆ read()

|

override, virtual |

This method implements reading a mesh from a specified file.

We are future-proofing the layout of this file by adding size information for all stored types. TODO: All types are currently stored at the same size; use the size information to pack things efficiently. For now we assume that "type size" is how the entire file will be encoded.

Implements libMesh::MeshInput< MeshBase >.

Definition at line 1250 of file xdr_io.C.

References _field_width, binary(), libMesh::Parallel::Communicator::broadcast(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::DECODE, legacy(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshInput< MT >::mesh(), libMesh::MeshTools::n_elem(), n_nodes, libMesh::ParallelObject::processor_id(), libMesh::READ, read_header(), read_serialized_connectivity(), read_serialized_edge_bcs(), read_serialized_nodes(), read_serialized_nodesets(), read_serialized_shellface_bcs(), read_serialized_side_bcs(), read_serialized_subdomain_names(), libMesh::Partitioner::set_node_processor_ids(), value, version(), version_at_least_0_9_2(), version_at_least_1_1_0(), and version_at_least_1_3_0().

Referenced by libMesh::NameBasedIO::read().

◆ read_header()

|

private |

Read header information - templated to handle old (4-byte) or new (8-byte) header id types.

We are future-proofing the layout of this file by adding size information for all stored types. TODO: All types are currently stored at the same size; use the size information to pack things efficiently. For now we assume that "type size" is how the entire file will be encoded.
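The idea of templating on the header id type can be sketched without libMesh. The helpers `pack_header` and `read_header_ids` below are hypothetical. They use host byte order and a raw byte buffer rather than real XDR encoding, and they only show how one template can serve both the 4-byte and the 8-byte case while handing back 64-bit values.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

using old_header_id_type = std::uint32_t;  // 4-byte ids
using new_header_id_type = std::uint64_t;  // 8-byte ids

// Hypothetical helper: store ids in a raw byte buffer using on-disk type T.
template <typename T>
std::vector<unsigned char> pack_header(const std::vector<new_header_id_type> & ids)
{
  std::vector<unsigned char> bytes(ids.size() * sizeof(T));
  for (std::size_t i = 0; i < ids.size(); ++i)
    {
      const T stored = static_cast<T>(ids[i]);
      std::memcpy(bytes.data() + i * sizeof(T), &stored, sizeof(T));
    }
  return bytes;
}

// Hypothetical helper: read ids stored as T and widen them to 64 bits,
// so the caller does not need to care which width the file used.
template <typename T>
std::vector<new_header_id_type> read_header_ids(const std::vector<unsigned char> & bytes)
{
  assert(bytes.size() % sizeof(T) == 0);
  std::vector<new_header_id_type> ids(bytes.size() / sizeof(T));
  for (std::size_t i = 0; i < ids.size(); ++i)
    {
      T value;
      std::memcpy(&value, bytes.data() + i * sizeof(T), sizeof(T));
      ids[i] = value;
    }
  return ids;
}
```

A caller would pick `T` from the "type size" recorded in the file and then work with the widened values.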

Definition at line 1380 of file xdr_io.C.

References _field_width, boundary_condition_file_name(), libMesh::Parallel::Communicator::broadcast(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::MeshInput< MT >::mesh(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshTools::n_elem(), n_nodes, libMesh::ParallelObject::n_processors(), partition_map_file_name(), polynomial_level_file_name(), libMesh::ParallelObject::processor_id(), libMesh::MeshBase::reserve_elem(), libMesh::MeshBase::reserve_nodes(), libMesh::MeshInput< MeshBase >::set_n_partitions(), subdomain_map_file_name(), and version_at_least_0_9_2().

Referenced by read().
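As a rough illustration of why the header reader is templated on the id type, the following sketch decodes a run of header ids that were stored either as 4-byte or as 8-byte integers and widens them to 64 bits. The names `read_header_ids` and `read_header_ids_by_width` are hypothetical and not part of libMesh, the byte-width argument is an assumption about how the choice might be made, and the sketch reads host byte order rather than real XDR encoding.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper (not libMesh API): decode `count` ids of type IdT
// from a raw buffer in host byte order and widen each one to 64 bits.
template <typename IdT>
std::vector<std::uint64_t> read_header_ids(const unsigned char * bytes,
                                           std::size_t count)
{
  std::vector<std::uint64_t> ids;
  ids.reserve(count);
  for (std::size_t i = 0; i < count; ++i)
    {
      IdT raw;
      // memcpy avoids alignment problems when reading from a byte buffer
      std::memcpy(&raw, bytes + i * sizeof(IdT), sizeof(IdT));
      ids.push_back(static_cast<std::uint64_t>(raw));
    }
  return ids;
}

// Assumed dispatch: pick the old (4-byte) or new (8-byte) id type
// from a field width given in bytes.
inline std::vector<std::uint64_t>
read_header_ids_by_width(const unsigned char * bytes,
                         std::size_t count,
                         unsigned field_width)
{
  return field_width == 8
           ? read_header_ids<std::uint64_t>(bytes, count)
           : read_header_ids<std::uint32_t>(bytes, count);
}
```

Templating keeps a single code path for both layouts; only the type used to interpret each stored id changes.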

◆ read_serialized_bc_names()

|

private |

Read boundary names information (sideset and nodeset) - NEW in 0.9.2 format

Definition at line 2061 of file xdr_io.C.

References libMesh::Parallel::Communicator::broadcast(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::ParallelObject::processor_id(), libMesh::BoundaryInfo::set_nodeset_name_map(), libMesh::BoundaryInfo::set_sideset_name_map(), version_at_least_0_9_2(), and version_at_least_1_3_0().

Referenced by read_serialized_bcs_helper(), and read_serialized_nodesets().

◆ read_serialized_bcs_helper()

|

private |

Helper function used in read_serialized_side_bcs, read_serialized_edge_bcs, and read_serialized_shellface_bcs.

Definition at line 1841 of file xdr_io.C.

References libMesh::BoundaryInfo::add_edge(), libMesh::BoundaryInfo::add_shellface(), libMesh::BoundaryInfo::add_side(), libMesh::as_range(), boundary_condition_file_name(), libMesh::Parallel::Communicator::broadcast(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), data, libMesh::Xdr::data_stream(), libMesh::MeshBase::get_boundary_info(), libMesh::MeshTools::Generation::Private::idx(), io_blksize, libMesh::MeshBase::level_elements_begin(), libMesh::MeshBase::level_elements_end(), libMesh::MeshInput< MT >::mesh(), libMesh::MeshInput< MeshBase >::mesh(), std::min(), libMesh::ParallelObject::processor_id(), read_serialized_bc_names(), libMesh::Xdr::reading(), and version_at_least_1_3_0().

Referenced by read_serialized_edge_bcs(), read_serialized_shellface_bcs(), and read_serialized_side_bcs().

◆ read_serialized_connectivity()

|

private |

Read the connectivity for a parallel, distributed mesh

Definition at line 1504 of file xdr_io.C.

References libMesh::Elem::add_child(), libMesh::MeshBase::add_elem(), libMesh::MeshBase::add_point(), libMesh::Parallel::Communicator::broadcast(), libMesh::Elem::build(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::Xdr::data_stream(), libMesh::Elem::dim(), libMesh::MeshBase::elem_ptr(), libMesh::MeshInput< MeshBase >::elems_of_dimension, libMesh::Elem::hack_p_level(), libMesh::Elem::INACTIVE, libMesh::DofObject::invalid_id, io_blksize, libMesh::Elem::JUST_REFINED, libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshInput< MT >::mesh(), libMesh::MeshBase::mesh_dimension(), std::min(), libMesh::MeshTools::n_elem(), libMesh::Elem::n_nodes(), partition_map_file_name(), polynomial_level_file_name(), libMesh::ParallelObject::processor_id(), libMesh::DofObject::processor_id(), libMesh::Xdr::reading(), libMesh::DofObject::set_id(), libMesh::MeshBase::set_mesh_dimension(), libMesh::Elem::set_node(), libMesh::Elem::set_refinement_flag(), libMesh::DofObject::set_unique_id(), libMesh::Elem::subdomain_id(), subdomain_map_file_name(), libMesh::Elem::type_to_n_nodes_map, and version_at_least_0_9_2().

Referenced by read().

◆ read_serialized_edge_bcs()

|

private |

Read the edge boundary conditions for a parallel, distributed mesh. NEW in 1.1.0 format.

- Returns

- The number of bcs read

Definition at line 1958 of file xdr_io.C.

References read_serialized_bcs_helper(), and value.

Referenced by read().

◆ read_serialized_nodes()

|

private |

Read the nodal locations for a parallel, distributed mesh

Definition at line 1690 of file xdr_io.C.

References _field_width, libMesh::Parallel::Communicator::broadcast(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::Xdr::data_stream(), libMesh::MeshTools::Generation::Private::idx(), io_blksize, libMesh::libmesh_isnan(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshInput< MT >::mesh(), std::min(), n_nodes, libMesh::MeshBase::n_nodes(), libMesh::MeshBase::node_ptr_range(), libMesh::MeshBase::node_ref(), libMesh::ParallelObject::processor_id(), libMesh::Xdr::reading(), libMesh::DofObject::set_unique_id(), and version_at_least_0_9_6().

Referenced by read().

◆ read_serialized_nodesets()

|

private |

Read the nodeset conditions for a parallel, distributed mesh

- Returns

- The number of nodesets read

Definition at line 1974 of file xdr_io.C.

References libMesh::BoundaryInfo::add_node(), libMesh::as_range(), boundary_condition_file_name(), libMesh::Parallel::Communicator::broadcast(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), data, libMesh::Xdr::data_stream(), libMesh::MeshBase::get_boundary_info(), libMesh::MeshTools::Generation::Private::idx(), io_blksize, libMesh::MeshInput< MT >::mesh(), libMesh::MeshInput< MeshBase >::mesh(), std::min(), libMesh::MeshBase::node_ptr_range(), libMesh::ParallelObject::processor_id(), read_serialized_bc_names(), libMesh::Xdr::reading(), and version_at_least_1_3_0().

Referenced by read().

◆ read_serialized_shellface_bcs()

|

private |

Read the "shell face" boundary conditions for a parallel, distributed mesh. NEW in 1.1.0 format.

- Returns

- The number of bcs read

Definition at line 1966 of file xdr_io.C.

References read_serialized_bcs_helper(), and value.

Referenced by read().

◆ read_serialized_side_bcs()

|

private |

Read the side boundary conditions for a parallel, distributed mesh

- Returns

- The number of bcs read

Definition at line 1950 of file xdr_io.C.

References read_serialized_bcs_helper(), and value.

Referenced by read().

◆ read_serialized_subdomain_names()

|

private |

Read subdomain name information - NEW in 0.9.2 format

Definition at line 1442 of file xdr_io.C.

References libMesh::Parallel::Communicator::broadcast(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::MeshInput< MT >::mesh(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::ParallelObject::processor_id(), libMesh::MeshBase::set_subdomain_name_map(), version_at_least_0_9_2(), and version_at_least_1_3_0().

Referenced by read().

◆ set_auto_parallel()

|

inline |

Insist that we should write parallel files if and only if the mesh is an already distributed DistributedMesh.

Definition at line 389 of file xdr_io.h.

References _write_parallel, and _write_serial.

◆ set_n_partitions()

|

inline protected inherited |

Sets the number of partitions in the mesh. Typically this gets done by the partitioner, but some parallel file formats begin "pre-partitioned".

Definition at line 91 of file mesh_input.h.

References libMesh::MeshInput< MT >::mesh().

Referenced by libMesh::Nemesis_IO::read(), and read_header().

◆ set_write_parallel()

|

inline |

Insist that we should/shouldn't write parallel files.

Definition at line 379 of file xdr_io.h.

References _write_parallel, and _write_serial.

◆ skip_comment_lines()

|

protected inherited |

Reads input from in, skipping all the lines that start with the character comment_start.

Definition at line 179 of file mesh_input.h.

Referenced by libMesh::TetGenIO::read(), and libMesh::UCDIO::read_implementation().

◆ subdomain_map_file_name() [1/2]

|

inline |

Get/Set the subdomain file name.

Definition at line 158 of file xdr_io.h.

References _subdomain_map_file.

Referenced by read_header(), read_serialized_connectivity(), write(), and write_serialized_connectivity().

◆ subdomain_map_file_name() [2/2]

|

inline |

◆ version() [1/2]

|

inline |

Get/Set the version string. Valid version strings:

- "libMesh-0.7.0+"

- "libMesh-0.7.0+ parallel"

If "libMesh" is not detected in the version string the LegacyXdrIO class will be used to read older (pre version 0.7.0) mesh files.

Definition at line 140 of file xdr_io.h.

References _version.

Referenced by read(), version_at_least_0_9_2(), version_at_least_0_9_6(), version_at_least_1_1_0(), version_at_least_1_3_0(), and write().
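The "libMesh" detection rule described for the version string can be pictured as a plain substring test. This is only a sketch: the helper name `looks_like_legacy_version` is hypothetical, and the actual check inside the reader may be written differently.

```cpp
#include <string>

// Hypothetical helper: a version string that does not mention "libMesh"
// is treated as belonging to an older (pre 0.7.0) mesh file.
inline bool looks_like_legacy_version(const std::string & version)
{
  return version.find("libMesh") == std::string::npos;
}
```

Both valid strings listed above contain "libMesh", so neither would be routed to the legacy reader under this rule.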

◆ version() [2/2]

|

inline |

◆ version_at_least_0_9_2()

| bool libMesh::XdrIO::version_at_least_0_9_2 | ( | ) | const |

- Returns

true if the current file has an XDR/XDA version that matches or exceeds 0.9.2.

As of this version we encode integer field widths, nodesets, subdomain names, boundary names, and element unique_id values (if they exist) into our files.

Definition at line 2153 of file xdr_io.C.

References version().

Referenced by read(), read_header(), read_serialized_bc_names(), read_serialized_connectivity(), and read_serialized_subdomain_names().
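One way to think about a "matches or exceeds" test is to parse the numeric part of the version string and compare it component by component. The sketch below assumes version strings of the form "libMesh-X.Y.Z+..."; the helpers `parse_version` and `version_at_least` are hypothetical, and the library may implement its checks in another way (for example, by matching known strings).

```cpp
#include <cstddef>
#include <cstdio>
#include <string>
#include <tuple>

// Hypothetical helper: extract (major, minor, patch) from a string such as
// "libMesh-0.9.2+". Returns false if the expected pattern is not found.
inline bool parse_version(const std::string & s, std::tuple<int, int, int> & out)
{
  const std::string prefix = "libMesh-";
  const std::size_t pos = s.find(prefix);
  if (pos == std::string::npos)
    return false;

  int major = 0, minor = 0, patch = 0;
  if (std::sscanf(s.c_str() + pos + prefix.size(), "%d.%d.%d",
                  &major, &minor, &patch) != 3)
    return false;

  out = std::make_tuple(major, minor, patch);
  return true;
}

// Hypothetical helper: std::tuple compares lexicographically, which is
// exactly the ordering wanted for (major, minor, patch).
inline bool version_at_least(const std::string & s, int major, int minor, int patch)
{
  std::tuple<int, int, int> v;
  return parse_version(s, v) && v >= std::make_tuple(major, minor, patch);
}
```

With this approach a single comparison routine could back all of the `version_at_least_*` queries, at the cost of relying on a fixed string layout.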

◆ version_at_least_0_9_6()

| bool libMesh::XdrIO::version_at_least_0_9_6 | ( | ) | const |

- Returns

true if the current file has an XDR/XDA version that matches or exceeds 0.9.6.

In this version we add node unique_id values to our files, if they exist.

Definition at line 2162 of file xdr_io.C.

References version().

Referenced by read_serialized_nodes().

◆ version_at_least_1_1_0()

| bool libMesh::XdrIO::version_at_least_1_1_0 | ( | ) | const |

◆ version_at_least_1_3_0()

| bool libMesh::XdrIO::version_at_least_1_3_0 | ( | ) | const |

- Returns

true if the current file has an XDR/XDA version that matches or exceeds 1.3.0.

In this version we fix handling of uint64_t binary values on Linux, which were previously miswritten as 32 bit via xdr_long.

Definition at line 2177 of file xdr_io.C.

References version().

Referenced by read(), read_serialized_bc_names(), read_serialized_bcs_helper(), read_serialized_nodesets(), and read_serialized_subdomain_names().
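To make the 32-bit problem concrete, the sketch below shows what happens to a 64-bit value that passes through a 32-bit slot: anything at or above 2^32 loses its upper half. The helper names are hypothetical, and the code only models the truncation; it does not call any XDR routine.

```cpp
#include <cstdint>

// Hypothetical model of a 64-bit value squeezed through a 32-bit field:
// the upper 32 bits are discarded.
inline std::uint64_t through_32_bits(std::uint64_t v)
{
  return static_cast<std::uint32_t>(v);
}

// True when the value would have been stored correctly despite the bug.
inline bool survives_32_bit_roundtrip(std::uint64_t v)
{
  return through_32_bits(v) == v;
}
```

Small ids are unaffected, which is one reason a defect of this kind can go unnoticed until a mesh is large enough to need the upper bits.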

◆ write()

|

override virtual |

This method implements writing a mesh to a specified file.

Implements libMesh::MeshOutput< MeshBase >.

Definition at line 168 of file xdr_io.C.

References _write_unique_id, libMesh::Parallel::Communicator::barrier(), binary(), boundary_condition_file_name(), libMesh::Xdr::close(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::ENCODE, libMesh::MeshBase::get_boundary_info(), legacy(), libMesh::MeshBase::max_node_id(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshOutput< MT >::mesh(), libMesh::BoundaryInfo::n_boundary_conds(), libMesh::BoundaryInfo::n_edge_conds(), libMesh::MeshBase::n_elem(), libMesh::MeshTools::n_elem(), libMesh::BoundaryInfo::n_nodeset_conds(), libMesh::MeshTools::n_p_levels(), libMesh::BoundaryInfo::n_shellface_conds(), libMesh::MeshBase::n_subdomains(), libMesh::out, partition_map_file_name(), polynomial_level_file_name(), libMesh::ParallelObject::processor_id(), subdomain_map_file_name(), version(), libMesh::WRITE, write_parallel(), write_serialized_connectivity(), write_serialized_edge_bcs(), write_serialized_nodes(), write_serialized_nodesets(), write_serialized_shellface_bcs(), write_serialized_side_bcs(), and write_serialized_subdomain_names().

Referenced by libMesh::ErrorVector::plot_error(), and libMesh::NameBasedIO::write().

◆ write_discontinuous_equation_systems()

|

virtual inherited |

This method implements writing a mesh with discontinuous data to a specified file where the data is taken from the EquationSystems object.

Definition at line 92 of file mesh_output.C.

References libMesh::EquationSystems::build_discontinuous_solution_vector(), libMesh::EquationSystems::build_variable_names(), libMesh::EquationSystems::get_mesh(), and libMesh::out.

Referenced by libMesh::ExodusII_IO::write_timestep_discontinuous().

◆ write_equation_systems()

|

virtual inherited |

This method implements writing a mesh with data to a specified file where the data is taken from the EquationSystems object.

Reimplemented in libMesh::NameBasedIO.

Definition at line 31 of file mesh_output.C.

References libMesh::EquationSystems::build_parallel_solution_vector(), libMesh::EquationSystems::build_solution_vector(), libMesh::EquationSystems::build_variable_names(), libMesh::EquationSystems::get_mesh(), and libMesh::out.

Referenced by libMesh::Nemesis_IO::write_timestep(), and libMesh::ExodusII_IO::write_timestep().

◆ write_nodal_data() [1/2]

|

inline virtual inherited |

This method implements writing a mesh with nodal data to a specified file where the nodal data and variable names are provided.

Reimplemented in libMesh::ExodusII_IO, libMesh::Nemesis_IO, libMesh::UCDIO, libMesh::NameBasedIO, libMesh::GmshIO, libMesh::GMVIO, libMesh::VTKIO, libMesh::MEDITIO, libMesh::GnuPlotIO, and libMesh::TecplotIO.

Definition at line 105 of file mesh_output.h.

◆ write_nodal_data() [2/2]

|

virtual inherited |

This method should be overridden by "parallel" output formats for writing nodal data. Instead of getting a localized copy of the nodal solution vector, it is passed a NumericVector of type=PARALLEL which is in node-major order, i.e. (u0,v0,w0, u1,v1,w1, u2,v2,w2, u3,v3,w3, ...), and contains n_nodes*n_vars total entries. It is then up to the individual I/O class to extract the required solution values from this vector and write them in parallel.

If not implemented, localizes the parallel vector into a std::vector and calls the other version of this function.

Reimplemented in libMesh::Nemesis_IO.

Definition at line 150 of file mesh_output.C.

References libMesh::NumericVector< T >::localize().
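The node-major layout described above means that the value of variable `var` at node `node` sits at position `node * n_vars + var`. The following sketch uses a plain `std::vector<double>` in place of a NumericVector, and the helper names are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Position of variable `var` at node `node` in a node-major vector
// (u0,v0,w0, u1,v1,w1, ...) holding n_vars variables per node.
inline std::size_t node_major_index(std::size_t node,
                                    std::size_t var,
                                    std::size_t n_vars)
{
  return node * n_vars + var;
}

// Hypothetical helper: gather the values of one variable across all nodes
// by striding through the vector n_vars entries at a time.
inline std::vector<double> extract_variable(const std::vector<double> & soln,
                                            std::size_t n_vars,
                                            std::size_t var)
{
  std::vector<double> out;
  if (n_vars == 0)
    return out;
  for (std::size_t i = var; i < soln.size(); i += n_vars)
    out.push_back(soln[i]);
  return out;
}
```

For example, with three variables per node, the second variable (`var = 1`) of the second node (`node = 1`) is found at index 4.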

◆ write_nodal_data_discontinuous()

|

inline virtual inherited |

This method implements writing a mesh with discontinuous data to a specified file where the nodal data and variable names are provided.

Reimplemented in libMesh::ExodusII_IO.

Definition at line 114 of file mesh_output.h.

◆ write_parallel()

|

inline |

Report whether we should write parallel files.

Definition at line 358 of file xdr_io.h.

References _write_parallel, _write_serial, libMesh::MeshBase::is_serial(), libMesh::MeshInput< MeshBase >::mesh(), and libMesh::MeshOutput< MT >::mesh().

Referenced by write().
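The interplay between set_write_parallel(), set_auto_parallel(), and write_parallel() can be pictured with two flags and the mesh's serial state. The sketch below is inferred from the descriptions and the referenced members (_write_parallel, _write_serial, is_serial()); the struct `ParallelWritePolicy`, its default flag values, and the exact precedence of the flags are assumptions rather than the library's code.

```cpp
// Hypothetical model of the parallel-write decision (not libMesh API).
struct ParallelWritePolicy
{
  bool write_serial = false;   // assumed default: no forced serial output
  bool write_parallel = false; // assumed default: no forced parallel output

  // Force parallel output on or off.
  void set_write_parallel(bool do_parallel = true)
  {
    write_parallel = do_parallel;
    write_serial = !do_parallel;
  }

  // Clear both overrides so the mesh itself decides.
  void set_auto_parallel()
  {
    write_serial = false;
    write_parallel = false;
  }

  // With no override, write parallel files exactly when the mesh
  // is distributed (that is, not serial).
  bool should_write_parallel(bool mesh_is_serial) const
  {
    if (write_serial)
      return false;
    if (write_parallel)
      return true;
    return !mesh_is_serial;
  }
};
```

Keeping two flags rather than one makes it possible to distinguish "forced serial", "forced parallel", and "decide automatically".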

◆ write_serialized_bc_names()

|

private |

Write boundary names information (sideset and nodeset) - NEW in 0.9.2 format

Definition at line 1210 of file xdr_io.C.

References libMesh::Xdr::data(), libMesh::BoundaryInfo::get_nodeset_name_map(), libMesh::BoundaryInfo::get_sideset_name_map(), and libMesh::ParallelObject::processor_id().

Referenced by write_serialized_bcs_helper(), and write_serialized_nodesets().

◆ write_serialized_bcs_helper()

|

private |

Helper function used in write_serialized_side_bcs, write_serialized_edge_bcs, and write_serialized_shellface_bcs.

Definition at line 993 of file xdr_io.C.

References libMesh::as_range(), bc_id, libMesh::BoundaryInfo::boundary_ids(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::Xdr::data_stream(), libMesh::BoundaryInfo::edge_boundary_ids(), libMesh::Parallel::Communicator::gather(), libMesh::MeshBase::get_boundary_info(), libMesh::MeshTools::Generation::Private::idx(), libMesh::BoundaryInfo::invalid_id, libMesh::MeshBase::local_level_elements_begin(), libMesh::MeshBase::local_level_elements_end(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshOutput< MT >::mesh(), libMesh::ParallelObject::n_processors(), libMesh::ParallelObject::processor_id(), libMesh::Parallel::Communicator::receive(), libMesh::Parallel::Communicator::send(), libMesh::BoundaryInfo::shellface_boundary_ids(), write_serialized_bc_names(), and libMesh::Xdr::writing().

Referenced by write_serialized_edge_bcs(), write_serialized_shellface_bcs(), and write_serialized_side_bcs().

◆ write_serialized_connectivity()

|

private |

Write the connectivity for a parallel, distributed mesh

Definition at line 351 of file xdr_io.C.

References _write_unique_id, libMesh::as_range(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), data, libMesh::Xdr::data_stream(), libMesh::Parallel::Communicator::gather(), libMesh::MeshBase::local_level_elements_begin(), libMesh::MeshBase::local_level_elements_end(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshOutput< MT >::mesh(), libMesh::MeshTools::n_active_levels(), libMesh::MeshBase::n_elem(), libMesh::MeshTools::n_elem(), n_nodes, libMesh::ParallelObject::n_processors(), pack_element(), partition_map_file_name(), polynomial_level_file_name(), libMesh::ParallelObject::processor_id(), libMesh::Parallel::pull_parallel_vector_data(), libMesh::Parallel::Communicator::receive(), libMesh::Parallel::Communicator::send(), subdomain_map_file_name(), libMesh::Parallel::Communicator::sum(), and libMesh::Xdr::writing().

Referenced by write().

◆ write_serialized_edge_bcs()

|

private |

Write the edge boundary conditions for a parallel, distributed mesh. NEW in 1.1.0 format.

Definition at line 1122 of file xdr_io.C.

References write_serialized_bcs_helper().

Referenced by write().

◆ write_serialized_nodes()

|

private |

Write the nodal locations for a parallel, distributed mesh

Definition at line 710 of file xdr_io.C.

References libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::Xdr::data_stream(), libMesh::Parallel::Communicator::gather(), libMesh::Parallel::Communicator::get_unique_tag(), libMesh::MeshTools::Generation::Private::idx(), io_blksize, libMesh::MeshBase::local_node_ptr_range(), libMesh::MeshBase::max_node_id(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshOutput< MT >::mesh(), std::min(), libMesh::ParallelObject::n_processors(), libMesh::ParallelObject::processor_id(), libMesh::Parallel::Communicator::receive(), libMesh::Parallel::Communicator::send(), and libMesh::Parallel::wait().

Referenced by write().

◆ write_serialized_nodesets()

|

private |

Write the nodeset conditions for a parallel, distributed mesh

Definition at line 1136 of file xdr_io.C.

References bc_id, libMesh::BoundaryInfo::boundary_ids(), libMesh::ParallelObject::comm(), libMesh::Xdr::data(), libMesh::Xdr::data_stream(), libMesh::Parallel::Communicator::gather(), libMesh::MeshBase::get_boundary_info(), libMesh::MeshTools::Generation::Private::idx(), libMesh::BoundaryInfo::invalid_id, libMesh::MeshBase::local_node_ptr_range(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshOutput< MT >::mesh(), libMesh::ParallelObject::n_processors(), libMesh::ParallelObject::processor_id(), libMesh::Parallel::Communicator::receive(), libMesh::Parallel::Communicator::send(), write_serialized_bc_names(), and libMesh::Xdr::writing().

Referenced by write().

◆ write_serialized_shellface_bcs()

|

private |

Write the "shell face" boundary conditions for a parallel, distributed mesh. NEW in 1.1.0 format.

Definition at line 1129 of file xdr_io.C.

References write_serialized_bcs_helper().

Referenced by write().

◆ write_serialized_side_bcs()

|

private |

Write the side boundary conditions for a parallel, distributed mesh

Definition at line 1115 of file xdr_io.C.

References write_serialized_bcs_helper().

Referenced by write().

◆ write_serialized_subdomain_names()

|

private |

Write subdomain name information - NEW in 0.9.2 format

Definition at line 313 of file xdr_io.C.

References libMesh::Xdr::data(), libMesh::MeshBase::get_subdomain_name_map(), libMesh::MeshInput< MeshBase >::mesh(), libMesh::MeshOutput< MT >::mesh(), and libMesh::ParallelObject::processor_id().

Referenced by write().

Member Data Documentation

◆ _bc_file_name

|

private |

Definition at line 342 of file xdr_io.h.

Referenced by boundary_condition_file_name().

◆ _binary

|

private |

◆ _communicator

|

protected inherited |

Definition at line 107 of file parallel_object.h.

Referenced by libMesh::EquationSystems::build_parallel_elemental_solution_vector(), libMesh::EquationSystems::build_parallel_solution_vector(), libMesh::ParallelObject::comm(), libMesh::ParallelObject::n_processors(), libMesh::ParallelObject::operator=(), and libMesh::ParallelObject::processor_id().

◆ _field_width

|

private |

Definition at line 340 of file xdr_io.h.

Referenced by read(), read_header(), and read_serialized_nodes().

◆ _is_parallel_format

|

protected inherited |

Flag specifying whether this format is parallel-capable. If this is false (default), I/O is only permitted when the mesh has been serialized.

Definition at line 159 of file mesh_output.h.

Referenced by libMesh::FroIO::write(), libMesh::PostscriptIO::write(), and libMesh::EnsightIO::write().

◆ _legacy

|

private |

◆ _p_level_file

|

private |

Definition at line 345 of file xdr_io.h.

Referenced by polynomial_level_file_name().

◆ _partition_map_file

|

private |

Definition at line 343 of file xdr_io.h.

Referenced by partition_map_file_name().

◆ _serial_only_needed_on_proc_0

|

protected inherited |

Flag specifying whether this format can be written by only serializing the mesh to processor zero.

If this is false (default), the mesh will be serialized to all processors.

Definition at line 168 of file mesh_output.h.

◆ _subdomain_map_file

|

private |

Definition at line 344 of file xdr_io.h.

Referenced by subdomain_map_file_name().

◆ _version

|

private |

◆ _write_parallel

|

private |

Definition at line 338 of file xdr_io.h.

Referenced by set_auto_parallel(), set_write_parallel(), and write_parallel().

◆ _write_serial

|

private |

Definition at line 337 of file xdr_io.h.

Referenced by set_auto_parallel(), set_write_parallel(), and write_parallel().

◆ _write_unique_id

|

private |

Definition at line 339 of file xdr_io.h.

Referenced by write(), and write_serialized_connectivity().

◆ elems_of_dimension

|

protected inherited |

A vector of bools describing what dimension elements have been encountered when reading a mesh.

Definition at line 97 of file mesh_input.h.

Referenced by libMesh::GMVIO::_read_one_cell(), libMesh::UNVIO::elements_in(), libMesh::UNVIO::max_elem_dimension_seen(), libMesh::AbaqusIO::max_elem_dimension_seen(), libMesh::AbaqusIO::read(), libMesh::Nemesis_IO::read(), libMesh::ExodusII_IO::read(), libMesh::GMVIO::read(), libMesh::VTKIO::read(), libMesh::AbaqusIO::read_elements(), libMesh::UCDIO::read_implementation(), libMesh::UNVIO::read_implementation(), and read_serialized_connectivity().
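A vector of bools like this is typically consulted after reading to find the highest element dimension that was encountered. The sketch below assumes that entry `d` is true when at least one `d`-dimensional element was seen; that indexing convention and the helper name `max_dimension_seen` are assumptions for illustration.

```cpp
#include <vector>

// Hypothetical helper: return the largest index whose flag is set,
// or 0 if no flag is set. Index d is assumed to mean "a d-dimensional
// element was encountered".
inline unsigned max_dimension_seen(const std::vector<bool> & elems_of_dimension)
{
  unsigned max_dim = 0;
  for (unsigned d = 0; d < elems_of_dimension.size(); ++d)
    if (elems_of_dimension[d])
      max_dim = d;
  return max_dim;
}
```

A reader that sees both edge and face elements would set entries 1 and 2, and the mesh dimension would then be taken as 2 under this convention.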

◆ io_blksize

|

static private |

Define the block size to use for chunked IO.

Definition at line 350 of file xdr_io.h.

Referenced by read_serialized_bcs_helper(), read_serialized_connectivity(), read_serialized_nodes(), read_serialized_nodesets(), and write_serialized_nodes().
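Chunked IO with a fixed block size usually amounts to walking the data in ranges of at most that many items, with the last range clipped by `std::min`. The sketch below only computes the ranges; the helper name `make_blocks` is hypothetical, and it does not reflect how the reader and writer functions exchange each block between processors.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: split [0, n_items) into half-open ranges
// [first, last) that each hold at most `blksize` items.
inline std::vector<std::pair<std::size_t, std::size_t>>
make_blocks(std::size_t n_items, std::size_t blksize)
{
  std::vector<std::pair<std::size_t, std::size_t>> blocks;
  if (blksize == 0)
    return blocks; // avoid an infinite loop on a zero block size

  for (std::size_t first = 0; first < n_items; first += blksize)
    blocks.emplace_back(first, std::min(first + blksize, n_items));

  return blocks;
}
```

Processing one block at a time bounds the size of the temporary buffers, regardless of how large the mesh is.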

The documentation for this class was generated from the following files:
